In my previous post, I talked about how we ported existing repository code from Azure SQL to Amazon Aurora.

This is a two-part series in which I will shed a little more light on the intention behind that port and what we were trying to achieve.

Background

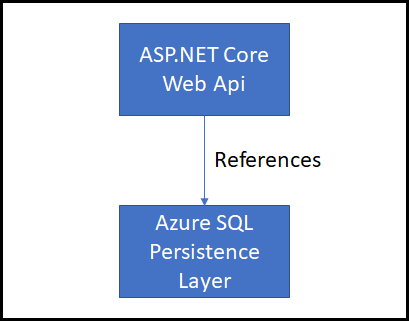

We had a small ASP.NET Core solution which had two primary components:

- ASP.NET Core Web API hosted on Azure Kubernetes Service (AKS)

- An Azure SQL Database based persistence layer

However, we now had a new requirement to deploy the same little application to Amazon Web Services (AWS).

The persistence layer had to be hosted on Amazon Aurora using MySQL. How we ported the persistence layer is explained in my previous post.

An important thing to note here is that this exercise started as a PoC (Proof-of-Concept) and ended as a PoC. The actual implementation turned out to be quite different because of the organization's governance model, regional limitations, and cross-cutting concerns such as authentication, logging, and build and deployment pipelines. However, I feel the approach is still worth mentioning and may be useful in many other use cases.

The Challenge

This new requirement brought up an interesting challenge for us: the same codebase now had to be deployed twice, to different cloud providers and with different database providers.

The aim of the PoC was to come up with a solution that met the following goals:

- The solution needed to be simple and should not require major code refactoring.

- The developers should be able to easily develop and run the application against both SQL Server and MySQL.

- The developers should be able to run the integration tests against both DB providers.

The Solution

To achieve this, we came up with the idea of using an application setting to differentiate the deployments. The diagram below explains the proposed solution.


Step 1: Project restructuring

We started with restructuring our code as below:

- The abstraction (the repository interface) was moved into a separate project.

- The SQL Server implementation of that abstraction was moved into a separate project.

- A new MySQL repository was introduced as a second implementation of the same interface.
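
The resulting split can be sketched roughly as follows. This is only an illustration, not our actual code: the `Customer` entity, the method signatures, and the class names `SqlServerRepository` and `MySqlRepository` are assumptions, and the three parts would live in three separate projects rather than in one file.

```csharp
using System;

// Abstractions project: the contract that both providers implement.
// Customer and the method signatures are hypothetical, for illustration only.
public class Customer
{
    public Guid Id { get; set; }
    public string Name { get; set; }
}

public interface IRepository
{
    void Add(Customer customer);
    void Update(Customer customer);
    Customer Get(Guid id);
}

// SQL Server project: the implementation used by the Azure deployment.
public class SqlServerRepository : IRepository
{
    private readonly string _connectionString;

    public SqlServerRepository(string connectionString) => _connectionString = connectionString;

    // Bodies are deliberately left unimplemented in this sketch.
    public void Add(Customer customer) => throw new NotImplementedException();
    public void Update(Customer customer) => throw new NotImplementedException();
    public Customer Get(Guid id) => throw new NotImplementedException();
}

// MySQL project: the implementation used by the AWS (Aurora) deployment.
public class MySqlRepository : IRepository
{
    private readonly string _connectionString;

    public MySqlRepository(string connectionString) => _connectionString = connectionString;

    public void Add(Customer customer) => throw new NotImplementedException();
    public void Update(Customer customer) => throw new NotImplementedException();
    public Customer Get(Guid id) => throw new NotImplementedException();
}
```

Because the Web API only ever references the abstractions project, neither provider-specific project leaks into the controllers.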

Step 2: Add an additional profile in launchSettings.json

To ensure we could run the application against both DB providers during development, we added a new profile in launchSettings.json. The two profiles after the changes were:

- local-azure: To run the solution against the Azure SQL implementation.

- local-aws: To run the solution against Amazon Aurora implementation.

The updated launchSettings.json looked similar to the following:
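
A minimal sketch of such a launchSettings.json is shown here. Only the two profile names come from our setup; the `applicationUrl` value and the choice to pass the profile name through `ASPNETCORE_ENVIRONMENT` are assumptions made for illustration.

```json
{
  "profiles": {
    "local-azure": {
      "commandName": "Project",
      "launchBrowser": false,
      "applicationUrl": "http://localhost:5000",
      "environmentVariables": {
        "ASPNETCORE_ENVIRONMENT": "local-azure"
      }
    },
    "local-aws": {
      "commandName": "Project",
      "launchBrowser": false,
      "applicationUrl": "http://localhost:5000",
      "environmentVariables": {
        "ASPNETCORE_ENVIRONMENT": "local-aws"
      }
    }
  }
}
```

With the default host builder, setting the environment name this way makes ASP.NET Core load appsettings.{Environment}.json on top of appsettings.json, so each profile picks up its own settings file. A profile can be selected from the command line with `dotnet run --launch-profile local-aws`.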

Step 3: Add a local application settings file for each profile/deployment

Next, a local application settings file was added for each profile, local-azure and local-aws. Each application settings file (appsettings.local-azure.json and appsettings.local-aws.json) had two settings:

- Deployment: Azure or AWS

- Connection String: A local connection string to connect to either SQL Server or MySQL.
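
As a rough sketch, appsettings.local-aws.json could look like the fragment below. The `Deployment` key is the setting described above; the `ConnectionStrings:Default` key name, the database name, and the credentials are placeholders I am assuming for illustration.

```json
{
  "Deployment": "AWS",
  "ConnectionStrings": {
    "Default": "Server=localhost;Port=3306;Database=sampledb;User ID=app_user;Password=<your-password>"
  }
}
```

The appsettings.local-azure.json counterpart would set `Deployment` to `Azure` and carry a local SQL Server connection string instead.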

Step 4: Updates to Startup.cs – Dependency Injection

Last but not least, Startup.cs was updated to inject the right dependencies based on the “Deployment” setting.
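
A minimal sketch of that wiring, assuming the built-in ASP.NET Core container (3.1 or later) and a `ConnectionStrings:Default` entry in the settings file. The class names `SqlServerRepository` and `MySqlRepository` are hypothetical stand-ins, stubbed here only so the snippet is self-contained; the original project may have wired this differently.

```csharp
using System;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;

// Stand-ins for the abstraction and the two provider projects (hypothetical names).
public interface IRepository { }

public class SqlServerRepository : IRepository
{
    public SqlServerRepository(string connectionString) { }
}

public class MySqlRepository : IRepository
{
    public MySqlRepository(string connectionString) { }
}

public class Startup
{
    public Startup(IConfiguration configuration) => Configuration = configuration;

    public IConfiguration Configuration { get; }

    public void ConfigureServices(IServiceCollection services)
    {
        services.AddControllers();

        // Both values come from the appsettings file of the active profile.
        var deployment = Configuration["Deployment"];
        var connectionString = Configuration.GetConnectionString("Default");

        if (string.Equals(deployment, "Azure", StringComparison.OrdinalIgnoreCase))
        {
            services.AddScoped<IRepository>(_ => new SqlServerRepository(connectionString));
        }
        else if (string.Equals(deployment, "AWS", StringComparison.OrdinalIgnoreCase))
        {
            services.AddScoped<IRepository>(_ => new MySqlRepository(connectionString));
        }
        else
        {
            // Fail fast on a missing or misspelled setting.
            throw new InvalidOperationException($"Unsupported Deployment setting: '{deployment}'.");
        }
    }

    public void Configure(IApplicationBuilder app)
    {
        app.UseRouting();
        app.UseEndpoints(endpoints => endpoints.MapControllers());
    }
}
```

Throwing on an unknown value is a design choice in this sketch: a wrong setting then surfaces at startup rather than as a missing dependency on the first request.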

As you can see in the code above, we used the deployment setting to choose which module/DB provider to load at run time through dependency injection.

To use the repository, we simply needed to inject the IRepository interface and call the relevant operation (Add, Update, Get, etc.).

That’s it! This allowed us to develop and run the application against both DB providers.

In the second and final part of this series, I will talk in detail about our integration test setup, which was arguably a little more than trivial.
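
For example, a controller might consume the repository along these lines. The `Customer` entity, the `Get(Guid)` signature, the route, and the controller name are all hypothetical; the interface is repeated in reduced form only so the sketch stands alone.

```csharp
using System;
using Microsoft.AspNetCore.Mvc;

// Hypothetical entity and a reduced version of the repository abstraction.
public class Customer
{
    public Guid Id { get; set; }
    public string Name { get; set; }
}

public interface IRepository
{
    Customer Get(Guid id);
}

[ApiController]
[Route("api/[controller]")]
public class CustomersController : ControllerBase
{
    private readonly IRepository _repository;

    // The container supplies whichever implementation was registered at startup.
    public CustomersController(IRepository repository) => _repository = repository;

    [HttpGet("{id:guid}")]
    public ActionResult<Customer> GetById(Guid id)
    {
        var customer = _repository.Get(id);
        if (customer is null)
        {
            return NotFound();
        }

        return customer;
    }
}
```

The controller has no knowledge of SQL Server or MySQL, which is what lets the same code run in both deployments.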

Photo by Caleb Jones on Unsplash
