Welcome to my blog on .NET, cloud, and everything else

-

Using Azure Cosmos persistence with NServiceBus

This post explains how you can use NServiceBus and Azure Cosmos DB persistence together for scalability and reliability.

-

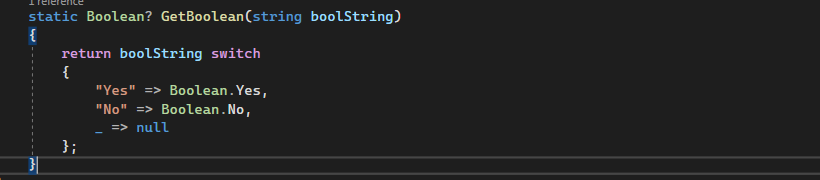

Gotchas with switch expression

This post describes a bug in Rider and the Roslyn analyzers that appears when we refactor a switch-case statement into a switch expression with a nullable default type.

-

Updating Cosmos DB document schema

This post explains the pain points of updating a schema in a NoSQL database and how you can update a Cosmos DB document schema using a custom JSON serializer.

-

Custom JSON serialization with Azure Cosmos DB SDK

This post explains how you can use custom JSON serializer settings with Cosmos DB using Newtonsoft.Json and System.Text.Json.

-

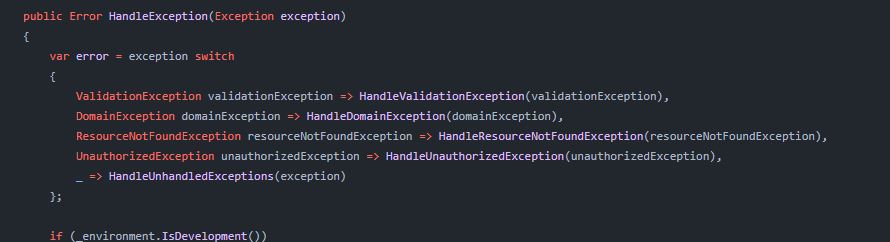

An opinionated way to consistent Error Handling in ASP.NET Core

In this post, I explain an opinionated way to achieve consistent error handling in your ASP.NET Core applications through an error response object.

-

Loading certificate in Azure App Service for Linux

In this post, I explain how you can load a certificate through code in Azure App Service for Linux.

-

Distributed lock using PostgreSQL

This post talks about why you may need a distributed lock and how you can create a distributed lock using PostgreSQL.

-

A poor person’s scheduler using .NET Background service

This post explains how you can create a simple scheduler using .NET BackgroundService without external libraries or serverless functions.

-

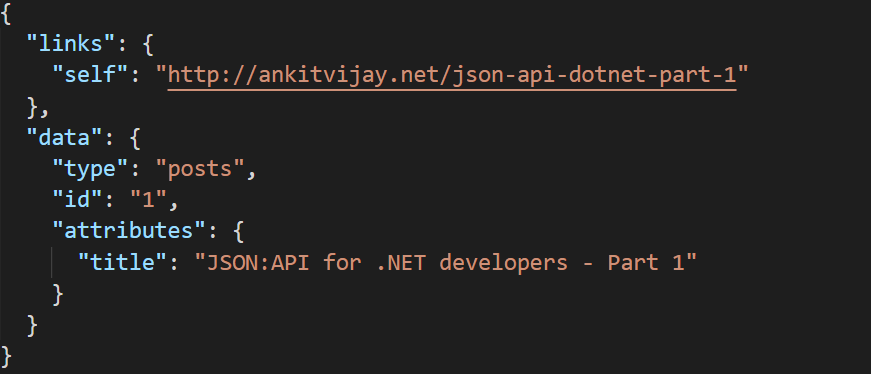

JSON:API for .NET developers – Part 1

This post is the first of my new series on JSON:API for .NET developers. JSON:API has been around for a while but is not widely known in the .NET world.

-

Passing correlation id across requests

This post describes the importance of passing a single correlation ID across requests in microservices and how we can achieve this in .NET.

Like what you read? Subscribe!